Database normalization is typically a good thing. It helps reduce the amount of errors in a database by having important information only stored in one place.It maintains referential integrity and helps prevent what are known as insertion and deletion anomalies. It allows the database design to accommodate future expansions of data storage requirements and makes it easy to retrieve data through a language such as SQL. There are various levels, or forms of normalization. The most common in average database usage is the third normal form. Although DBAs may not be familiar with the terminology of normalization, I think most can recognize it when they see it. They are also probably likely to be able to design a normalized database (well, most senior DBAs anyway) and to write queries against one. But once in a while, you’ll run up against a programmer that either doesn’t understand the concept of normalization or who knows a little bit about it and takes to to extremes. I ran across one of these today.

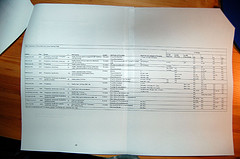

Well, I didn’t actually meet the guy (or gal) in person. But I did come across their database design. Check out this portion of the design:

Clearly, the designer had some inkling of normalization. Putting the states and countries into their own table is proper use of normalization. If the tblAddress table contains the addresses of 2 million people living in California, there’s no need to store “California” and “United States Of America” 2 million times. Put that data in another table once and store a pointer to it (in the form of a foreign key) in the address table. This also prevents addresses from having state values of “CA”, “Calif”, “Californa”, and the like.

But this designer took normalization one step too far. He (or she) put the street name into its own table. Now, I will give the designer props for separating out the number portion of the address (vcStreetNO) from the street name (vcStreet), but really, this is not an appropriate use of normalization. And yes, there are probably lots of “Main Streets” in California, so technically, you may save a few bytes here and there, if you looked for duplicates. But how will this table be maintained? If someone needs to enter a new address, you can reasonably present them with a list of all the states and countries from those tables to choose from. But you are you going to list all the streets in California for them to select from? No way. So you are most likely going to let the user type in some text for the street address then insert that data into the tblStreet table. And I’d bet willing to bet you’re not going to check to see if that street already exists in the table. Even if you did, when you consider the possibilities of street names including “North”, “East”, “N.” etc. (not to mention user typos) you’ll really reduce the number of potential matches you might find. This defeats one of the purposes of normalizing data – to minimize storing duplicate data.

This is a just a bad design. It serves as an example of why DBAs should be at least somewhat involved in the database design process.