In this post, I walk through the steps of basic index analysis and, although we end up with a fairly obvious solution, we discover that optimizing SQL Server performance sometimes doesn’t even involve SQL Server.

One of the occupation hazards, if you will, of being a DBA, is that you tend to think about possible database applications or configurations in the various encounters you have in everyday life. (Or maybe that’s just me.) I’ve already written about how I’ve thought about how databases could be used to improve the bottom line of casinos. Along those same lines, I’ve also been intrigued by the databases behind state and multi-state lotteries, particularly, the Powerball lottery. (Background: to win the Powerball Jackpot, you need to pick 5 distinct numbers between 1 and 59 plus one “bonus” number between 1 and 39. If all these numbers match the randomly chosen numbers, you win the Jackpot. The order of the first 5 numbers does not matter. Drawings are twice a week and each entry costs one dollar.)

I’ve often wondered why, after the winning numbers have been picked, that it is not until the next day that we find out if a winning ticket had been sold. I had a hard time believing it would take a computer all night to search the database of tickets sold to see if there was a match. Now, keep in mind, I’m not talking about checking if a specific ticket is a winner. That’s a relatively trivial task of comparing one set of six numbers against one other set of six numbers. What I am interested in is the searching of the entire dataset of tickets sold (millions of records) for matches to the actual random numbers drawn (one record).

In preparing to write this post, I made some discoveries about Powerball that has somewhat diminished my curiosity. For example, the official Powerball site states that each state lottery maintains its own central computer system. So even though there are 44 different states participating, there is not one central database of tickets sold. There are at least 44 databases. This makes searches much simpler as the number of tickets to be searched is greatly reduced. Rather than one huge database of tickets to check, there are lots of smaller databases to check. This is likely the reason for the overnight delay in reporting if a winning jackpot ticket was sold – results from the various states have to be gathered and collated. I would argue this could still be done quickly by a computer, but given the various different state laws and large amounts of money at stake, there are probably procedures in place to prevent fraud and following those are what takes time.

Anyway, even though the actual system likely uses smaller databases than I first imagined, I still wanted to get an idea of how one would go about indexing a lottery ticket database for quick searches. The bonus number, since it is a single number matched by itself, would seem an obvious choice to have its own index. But what about the other 5 numbers? Should there be an index on each one or one single index containing each value? I needed to run some tests. And the first step in doing that is to get some test data.

How many Powerball tickets are sold? This site gives the total Powerball sales for each drawing for 2010. Averaging the data (only from Feb 2010 (when the number of states participating changed) to mid-December, when I am writing this), gives us a figure of about $28 million in sales per drawing. Tickets cost one dollar each which gives us 28 million tickets sold. Because each state uses its own computer system, we need to translate this to a per state figure. There are 44 states participating, so that works out to roughly 636,000 tickets sold per state. That’s a huge approximation that assumes an even distribution of sales, which is obviously not correct. A populated state such as New York will sell many more tickets than a sparsely populated one like Montana. But I’m not looking for real-world modeling here, just an in-the-ballpark estimate. Fewer rows are easier to search and I’m interested in searching large tables, so let’s assume we are looking at a relatively populous state and go with a figure of 3 million tickets sold per drawing.

The next step is to create a table to hold these records. Below is the code to create such a table. The table includes a field for the drawing date, but, after a bit more thinking, I’ve decided this probably isn’t needed. Given the amount of tickets sold, in the real world, it would probably make more sense and provide better performance if the data for each drawing was held in its own table. I added a primary key field as a clustered index as well.

CREATE TABLE [dbo].[TicketsSold]( [PK] [int] IDENTITY(1,1) NOT NULL, [Number1] [tinyint] NOT NULL, [Number2] [tinyint] NOT NULL, [Number3] [tinyint] NOT NULL, [Number4] [tinyint] NOT NULL, [Number5] [tinyint] NOT NULL, [BonusNumber] [tinyint] NOT NULL, [DrawingDate] [date] NOT NULL, CONSTRAINT [PK_TicketsSold] PRIMARY KEY CLUSTERED ( [PK] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, IGNORE_DUP_KEY = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] ) ON [PRIMARY] GO ALTER TABLE [dbo].[TicketsSold] ADD CONSTRAINT [DF_TicketsSold_DrawingDate] DEFAULT (getdate()) FOR [DrawingDate] GO

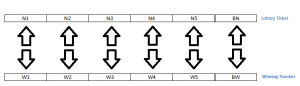

Next, it was time to write the code that would generate 3 million random sets of numbers. I should point out that I’m not too concerned with whether the numbers are truly random or not. SQL Server’s pseudo-random number algorithm should be fine for my purposes. I also spent some time thinking about the next step – searching the data. Because the order of the first five numbers does not matter, a simple comparison was out of the question. Five numbers can be arranged in 5!, or 120, different ways. Writing code to check all of those would be horrible and performance would be equally bad. Knowing that others smarter and with more time than me have faced this problem, I thought about how they might have approached this problem. If you’ve ever bought a Powerball or lottery ticket, you may have noticed that your numbers are printed out on the ticket in ascending order. So even if you pick random numbers, they still appear on the ticket as an ordered set. I had always thought this was just for convenience or possibly just a little quirk of whoever programmed the system originally, but now I see there is actually a reason behind this. If you order them this way (and store them in the database this way), you dramatically reduce the number of combinations you have to check. (I should note here that, for the purposes of this exercise, I am only concerned about matching all 6 numbers. In Powerball, you can actually win a prize if you match as few as 1 number plus the bonus number or the bonus number alone. I’m certain that ordering the numbers will help in finding matches for these cases as well, but that would likely involve a bit fancier algorithms than I am going to develop here.) If the numbers are ordered from smallest to biggest (or vice-versa), provided you order the winning number the same way, all you have to do to find a jackpot winner is match each number in turn:

If any number doesn’t match, the ticket is not a jackpot winner.

The Details

Here’s the code to generate test data. It will make 3 million rows, sort each group of 5 numbers, and write it to the table. Sorting was done using a table variable. I wrote five rows to the table, each a random number. I then popped them off the top of the table and assigned them to variables using the TOP 1 and ORDER BY clauses of a SELECT statement to perform the sorting. This was easier for me than trying to code some string sorting function in T-SQL. Again, I’m not concerned about performance here. This is a script that will be run once, so I can live with any inefficiencies in it.

/* Generate test Powerball data */ /* shaunjstuart.com */ SET NOCOUNT on DECLARE @Number1 tinyint DECLARE @Number2 tinyint DECLARE @Number3 tinyint DECLARE @Number4 tinyint DECLARE @Number5 tinyint DECLARE @BonusNumber tinyint DECLARE @LoopCounter int = 1 DECLARE @SortingTable table (Number tinyint) WHILE @LoopCounter <=3000000 BEGIN DECLARE @maxRandomValue TINYINT = 59 DECLARE @minRandomValue TINYINT = 1 DECLARE @Counter tinyint = 1 DECLARE @RandomNumber tinyint SET @Number1 = 0 SET @Number2 = 0 SET @Number3 = 0 SET @Number4 = 0 SET @Number5 = 0 SET @BonusNumber = 0 WHILE @Counter <=5 BEGIN SELECT @RandomNumber = CAST(((@maxRandomValue + 1) - @minRandomValue) * RAND() + @minRandomValue AS TINYINT) IF NOT EXISTS (SELECT 1 FROM @SortingTable WHERE Number = @RandomNumber) /* duplicate numbers are not allowed */ BEGIN INSERT INTO @SortingTable (Number) VALUES (@RandomNumber) SELECT @Counter = @Counter +1 END END SELECT @maxRandomValue = 39 SELECT @BonusNumber = CAST(((@maxRandomValue + 1) - @minRandomValue) * RAND() + @minRandomValue AS TINYINT) /* Pull numbers out again, this time sorted */ SELECT TOP 1 @Number1 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @Number1 SELECT TOP 1 @Number2 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @Number2 SELECT TOP 1 @Number3 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @Number3 SELECT TOP 1 @Number4 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @Number4 SELECT TOP 1 @Number5 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @Number5 INSERT INTO TicketsSold (Number1, Number2, Number3, Number4, Number5, BonusNumber, DrawingDate) VALUES (@Number1, @Number2, @Number3, @Number4, @Number5, @BonusNumber, '8/18/2011') SET @LoopCounter = @LoopCounter + 1 END

So let’s start our index analysis! First, we said we’d start with an index on the bonus number only. Here is the code to create that:

CREATE NONCLUSTERED INDEX [IX_BonusOnly] ON [dbo].[TicketsSold] ( [BonusNumber] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO

Here’s the code that I will use to search for a winning jackpot ticket. The hard coded numbers were initially generated randomly, but I wanted to keep them the same for my various test cases. I sorted the digits in ascending order using the same method as before.

/* Powerball ticket matching code */ /* shaunjstuart.com */ DECLARE @MyNumber1 tinyint DECLARE @MyNumber2 tinyint DECLARE @MyNumber3 tinyint DECLARE @MyNumber4 tinyint DECLARE @MyNumber5 tinyint DECLARE @MyBonusNumber tinyint SET @MyNumber1 = 31 SET @MyNumber2 = 8 SET @MyNumber3 = 14 SET @MyNumber4 = 28 SET @MyNumber5 = 53 SET @MyBonusNumber = 39 /* Put winning ticket numbers in order */ DECLARE @SortingTable table (Number tinyint) INSERT INTO @SortingTable (Number) VALUES (@MyNumber1) INSERT INTO @SortingTable (Number) VALUES (@MyNumber2) INSERT INTO @SortingTable (Number) VALUES (@MyNumber3) INSERT INTO @SortingTable (Number) VALUES (@MyNumber4) INSERT INTO @SortingTable (Number) VALUES (@MyNumber5) SELECT TOP 1 @MyNumber1 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @MyNumber1 SELECT TOP 1 @MyNumber2 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @MyNumber2 SELECT TOP 1 @MyNumber3 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @MyNumber3 SELECT TOP 1 @MyNumber4 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @MyNumber4 SELECT TOP 1 @MyNumber5 = Number FROM @SortingTable ORDER BY Number asc DELETE FROM @SortingTable WHERE Number = @MyNumber5 /* Now do the actual search, keeping track of the time it takes */ SET STATISTICS TIME ON SELECT 'WinningTicketFound!! Primary key=' + convert(varchar(10),PK) FROM TicketsSold WHERE Number1 = @MyNumber1 AND Number2 = @MyNumber2 AND Number3 = @MyNumber3 AND Number4 = @MyNumber4 AND Number5 = @MyNumber5 AND BonusNumber = @MyBonusNumber SET STATISTICS TIME OFF

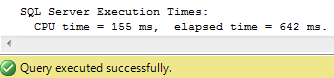

I think everyone can agree that without any additional indexes, performance will suck. But just for giggles and later comparison, let’s try running the search right now. Here is the estimated execution plan (only the final SELECT statement).

So without any additional indexes, SQL has to perform a clustered index scan. The total cost (not shown) for this SELECT statement is 11.645. An interesting thing to note: the index on the bonus number was not used. I ran the results, instructing SSMS to show the actual execution plan with the results, just to make sure it used the same one it estimated. It did. (It found no matches to my ticket, so I didn’t win millions.) Here are the execution times:

Let’s see what happens when we add an index to each field.

CREATE NONCLUSTERED INDEX [IX_Number1] ON [dbo].[TicketsSold] ( [Number1] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO CREATE NONCLUSTERED INDEX [IX_Number2] ON [dbo].[TicketsSold] ( [Number2] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO CREATE NONCLUSTERED INDEX [IX_Number3] ON [dbo].[TicketsSold] ( [Number3] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO CREATE NONCLUSTERED INDEX [IX_Number4] ON [dbo].[TicketsSold] ( [Number4] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO CREATE NONCLUSTERED INDEX [IX_Number5] ON [dbo].[TicketsSold] ( [Number5] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO

Before testing again, let’s clear the caches so we get data that isn’t influenced by any plans that SQL Server might have cached. Note: be sure you are doing this on a test server. You don’t want run the following code on a production server! The db_ID of my database is 8, which is used in the first statement. If you are playing along at home, replace this with your DB_ID.

DBCC FLUSHPROCINDB (8) DBCC FREEPROCCACHE DBCC DROPCLEANBUFFERS

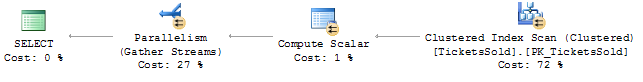

Now, when we display the estimated execution plan, we get:

We can see the clustered index scan has been replaced by index seeks of the indexes on Number2, Number3, and Number4. Why not the other fields and still no use of the index on BonusNumber? If you look at the tooltip for the Clustered Key Lookup, you’ll see the following:

You can see in the Predicates portion that SQL is filtering for Number1, Number5, and BonusNumber here. SQL Server has decided that it would be more efficient to do a lookup and filter the results than to perform seeks of three additional indexes and merge the results. Total cost for this query is 0.733865. The total execution time is:

(Note: you may have slightly different plans if you try this on your server. The choices the Query Optimizer makes are dependent on the distribution of the data in the indexes. Since we are using random numbers, your data distribution might be different than mine, resulting in different indexes being used. I’ve run this test using different sets of random numbers and the indexes used change slightly, but the plan always results in something similar – namely two indexes used then a scan.)

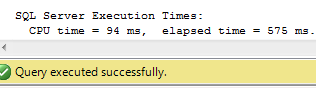

Pretty good for a first step. We dropped the cost by about 93%, the CPU time by 40 ms, and the execution time by about 70 ms. If we right click the database and choose Properties, then select Storage, we can see the indexes take up about 155 MB of disk space. Now let’s try using a single index on all five number fields.

First, let’s drop the five single field indexes and clear the caches again. Now, create an index containing the five number fields and, because I can sense where this is going, we’ll include the bonus number field as well:

CREATE NONCLUSTERED INDEX [IX_AllFields] ON [dbo].[TicketsSold] ( [Number1] ASC, [Number2] ASC, [Number3] ASC, [Number4] ASC, [Number5] ASC, [BonusNumber] ASC )WITH (PAD_INDEX = OFF, STATISTICS_NORECOMPUTE = OFF, SORT_IN_TEMPDB = OFF, IGNORE_DUP_KEY = OFF, DROP_EXISTING = OFF, ONLINE = OFF, ALLOW_ROW_LOCKS = ON, ALLOW_PAGE_LOCKS = ON, FILLFACTOR = 90) ON [PRIMARY] GO

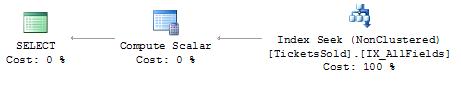

The new estimated execution plan is:

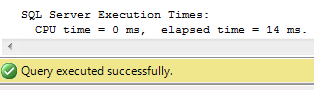

We can see we’ve replaced a lookup step, a loop, a merge, and three index seeks with a single index seek. Mousing over the final step shows the cost is this query is 0.0032832, another huge reduction from the already greatly reduced cost from the last test. Executing the actual search now gives the following execution times:

Wow.. A huge difference! And looking at the size the index occupies on disk, we see it’s only about 26 MB, also a huge reduction from the 155 MB of the five separate indexes, so in addition to improving performance, we’ve also reduced disk usage!

In retrospect, this is not a surprising result. If you want a query to run quickly, make an index that includes the fields in the WHERE clause of your query. In fact, if you’ve been playing along at home, you’ll noticed I’ve been cheating a bit. I haven’t been giving you all of the information SSMS returns with the estimated execution plan. He’s a larger view of the plan with no indexes:

Notice the line in green. When SSMS displays the estimated execution plan, it also displays a helpful message about a missing index. In effect, it’s telling you “Hey! You could make this query run faster if you added an index! And here’s what that index should be. And it will likely have a significant effect on improving performance.” (The “impact” number is a unitless number that is an estimate of how much performance will improve. The number only has meaning relative to the same number for other indexes, although a value of 98 generally means it’ll have a big impact.) Now, I will state that we are in a relatively “clean” environment, meaning this is a test server and only one query is hitting this table, so adding this index will have negligible impact on the system overall. A production environment is a different story. Maintaining indexes does incur some overhead, so before you start adding indexes willy-nilly to tables, you also need to take into account the performance impact the additional indexes might have – which is a function of how many inserts, deletes, and updates are going on in your table.

The Lesson

So we’ve taken steps to try out various indexing strategies and see how they perform. We ended up creating an index that should have been an obvious one to create, but at least we have the empirical evidence to prove we made the right choice. We also showed that “obvious” choices for an index are not always useful – our bonus number only index was never used at all.

The big take away from this experiment, however, lies in the one crucial decision we made: the decision to sort the group of five numbers before storing or searching for them. This allowed us to compare each number on the lottery ticket to each of the winning numbers in order. If we did not do this, our code would have to look at all 120 different combinations of five numbers. That would be a nightmare to code and to try to optimize indexes for! What we’ve shown is that the biggest performance improvement is often made by applying a little thought to how we do things. To put it another way, good design trumps faster hardware.